Hawk

A team of Lehigh researchers led by Prof. Edmund B. Webb III of the Department of Mechanical Engineering and Mechanics was awarded a $400K grant by the National Science Foundation's (NSF) Campus Cyberinfrastructure program to acquire an HPC cluster, Hawk, to enhance collaboration, research productivity, and educational impact.

The proposal team included co-PIs Ganesh Balasubramanian (Mechanical Engineering & Mechanics), Lisa Fredin (Chemistry), Alexander Pacheco (Library & Technology Services), and Srinivas Rangarajan (Chemical & Biomolecular Engineering), and Senior Personnel Stephen Anthony (Library & Technology Services), Rosi Reed (Physics), Jeffrey Rickman (Materials Science & Engineering), and Martin Takac (Industrial & Systems Engineering).

Acknowledgement

In publications, reports, and presentations that utilize Sol, Hawk and Ceph, please acknowledge Lehigh University using the following statement:

"Portions of this research were conducted on Lehigh University's Research Computing infrastructure partially supported by NSF Award 2019035"

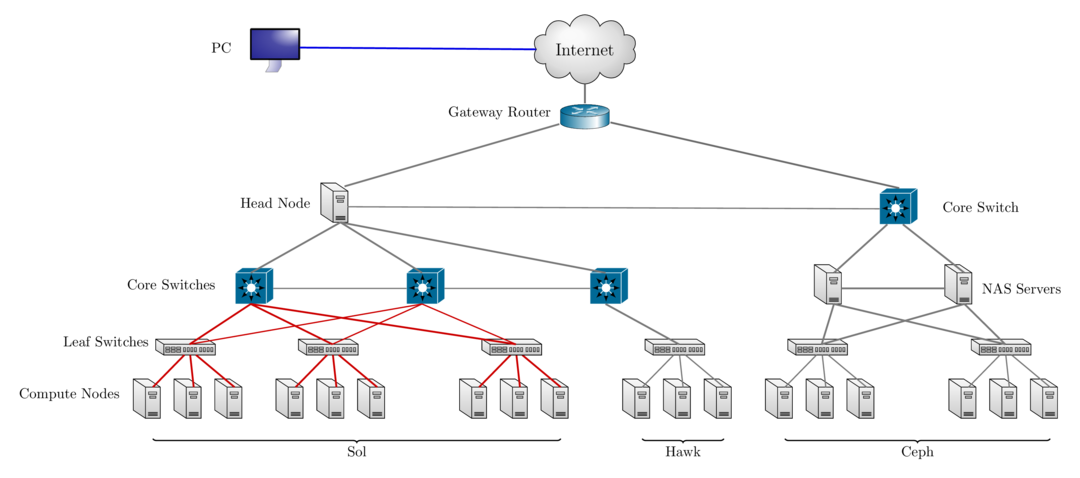

Hawk will share its storage, software stack, and login node with Sol. Users will log in to Sol (sol.cc.lehigh.edu) and submit jobs to the hawkcpu, hawkgpu, and hawkmem partitions, which serve the Hawk compute nodes.

Configuration

Compute

| | Regular Nodes | Big Mem Nodes | GPU Nodes |
|---|---|---|---|
| Partition Name | hawkcpu | hawkmem | hawkgpu |
| Nodes | 26 | 4 | 4 |
| CPU Type | Xeon Gold 6230R | Xeon Gold 6230R | Xeon Gold 5220R |
| CPUs/Socket | 26 | 26 | 24 |
| CPU Speed | 2.1GHz | 2.1GHz | 2.2GHz |
| RAM/Node (GB) | 384 | 1536 | 192 |
| GPU Type | | | NVIDIA Tesla T4 |
| GPUs/Node | | | 8 |
| GPU Memory (GB per GPU) | | | 16 |
| Total CPUs | 1352 | 208 | 192 |
| Total RAM (GB) | 9984 | 6144 | 768 |
| Total SUs/year | 11,843,520 | 1,822,080 | 1,681,920 |
| Peak Performance (TFLOPs) | 56.2432 | 8.6528 | 4.3008 |
| HPL (turbo speed) TFLOPs/Node | 2.058 | 2.220 | 1.436 |
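
The Total CPUs and Total SUs/year rows can be reproduced from the per-node figures. The sketch below assumes two CPU sockets per node and one SU per core-hour over a 365-day year (8,760 hours). Neither assumption is stated on this page, but both are consistent with the numbers in the table. The function names are illustrative only.

```shell
#!/bin/bash
# Assumptions inferred from the table, not stated in it:
SOCKETS_PER_NODE=2                 # 1352 CPUs / 26 nodes / 26 CPUs per socket = 2
HOURS_PER_YEAR=$(( 365 * 24 ))     # 8760; assumes 1 SU = 1 core-hour

# total_cpus NODES CPUS_PER_SOCKET -> CPU cores in the partition
total_cpus() {
    echo $(( $1 * SOCKETS_PER_NODE * $2 ))
}

# annual_sus TOTAL_CPUS -> service units available per year
annual_sus() {
    echo $(( $1 * HOURS_PER_YEAR ))
}

# Partition name, node count, and CPUs per socket, taken from the table
while read -r name nodes cpus_per_socket; do
    cpus=$(total_cpus "$nodes" "$cpus_per_socket")
    printf '%-8s CPUs=%-5s SUs/year=%s\n' "$name" "$cpus" "$(annual_sus "$cpus")"
done <<EOF
hawkcpu 26 26
hawkmem 4 26
hawkgpu 4 24
EOF
```

Under these assumptions the three partitions sum to 1,752 CPUs and 15,347,520 SUs per year, matching the Summary figures.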

Summary

| Resource | Value |
|---|---|
| Nodes | 34 |
| CPUs | 1752 |
| RAM (GB) | 16896 |
| GPUs | 32 |
| GPU Memory (GB) | 512 |
| Annual SUs | 15,347,520 |
| CPU Performance (TFLOPs) | 69.1968 |
| GPU Performance FP32 (TFLOPs) | 8.11 |
| GPU Performance FP16 (TFLOPs) | 259.2 |
| GPU Performance FP16/FP32 (TFLOPs) | 526.5 |
| GPU Performance INT8 (TOPs) | 1053 |
| GPU Performance INT4 (TOPs) | 2106 |

Storage

| Specification | Value |
|---|---|
| Nodes | 7 |
| CPU Type | AMD EPYC 7302P |
| CPUs/node | 16 |
| RAM/node (GB) | 128 |
| OS SSD/node | 2x 240GB |
| Ceph HDD/node | 9x 12TB |
| CephFS SSD/node | 3x 1.92TB |
| Total Ceph (TB) | 756 |
| Total CephFS (TB) | 39.9 |
| Total Storage (TB) | 798 |
| Usable Ceph (TB) | 214 |
| Usable CephFS (TB) | 11.3 |

Differences between Hawk and Sol

Hawk differs from Sol in the following major ways:

- Hawk has no InfiniBand interconnect, so running multi-node jobs is not recommended.

- Lmod loads a different default MPI library on each cluster: mvapich2 on Sol and mpich on Hawk.

By default, applications optimized for Haswell (the head node CPU) are loaded. To load applications optimized for Cascade Lake CPUs instead, add the following line to your submit script before loading any modules:

source /etc/profile.d/zlmod.sh

intel/20.0.3 and mpich/3.3.2 are automatically loaded on the hawkcpu and hawkmem partitions, while intel/19.0.3, mpich/3.3.2, and cuda/10.2.89 are automatically loaded on hawkgpu. These are loaded via the hawk and hawkgpu modules, respectively. To switch to the mvapich2 equivalents on Sol and the Pavo (debug) cluster after the OS upgrade, load the sol and solgpu modules.

To make the best use of the hawkgpu nodes, please request 6 CPUs per GPU (each hawkgpu node has 48 CPUs and 8 GPUs).
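
As an illustration of the 6-CPUs-per-GPU guideline, here is a minimal sketch of a submit script for one GPU. It assumes the scheduler is Slurm, which this page does not name. The job name, time limit, and the commented-out application command are placeholders. The `zlmod.sh` line is guarded so the script does nothing harmful on machines where that file is absent.

```shell
#!/bin/bash
# Assumption: the scheduler is Slurm. Adjust the directives if it is not.
#SBATCH --partition=hawkgpu
#SBATCH --nodes=1
#SBATCH --ntasks=1
#SBATCH --cpus-per-task=6        # 6 CPUs for each GPU requested
#SBATCH --gres=gpu:1             # one GPU
#SBATCH --time=01:00:00          # placeholder time limit
#SBATCH --job-name=hawkgpu-demo  # placeholder job name

# Select the Cascade Lake builds before loading any modules.
if [ -f /etc/profile.d/zlmod.sh ]; then
    source /etc/profile.d/zlmod.sh
fi

# Keep the CPU request in step with the GPU count.
CPUS_PER_GPU=6
NUM_GPUS=1
NUM_CPUS=$(( CPUS_PER_GPU * NUM_GPUS ))

# Use the scheduler's CPU count when it is set, else the value computed above.
export OMP_NUM_THREADS="${SLURM_CPUS_PER_TASK:-$NUM_CPUS}"
echo "GPUs: ${NUM_GPUS}, CPU threads: ${OMP_NUM_THREADS}"

# srun ./my_gpu_app   # hypothetical application; replace with your own
```

To request more GPUs, scale both directives together, for example `--gres=gpu:2` with `--cpus-per-task=12`.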

Resource Allocation

Policies and procedures for requesting computing time are described in Account & Allocations. The following allocation distribution is the one described in the proposal.

Compute

- 50% will be allocated to the PI, co-PI and Sr. Personnel team (7,673,760 SUs)

- 20% will be shared with the Open Science Grid, as required by the grant (3,069,504 SUs)

- 25% will be available to the General Lehigh Research Community (3,836,880 SUs)

- 5% will be distributed at the discretion of the Lehigh Provost (767,376 SUs)
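
The figures in parentheses follow from applying each percentage to the 15,347,520 annual SUs listed in the Summary table. A minimal sketch of that arithmetic, with an illustrative function name:

```shell
#!/bin/bash
TOTAL_SUS=15347520   # Annual SUs from the Summary table

# share_sus PERCENT -> SUs in that share (integer arithmetic)
share_sus() {
    echo $(( TOTAL_SUS * $1 / 100 ))
}

# Percentage and recipient for each share, as listed above
while read -r pct label; do
    printf '%3s%%  %10s SUs  %s\n' "$pct" "$(share_sus "$pct")" "$label"
done <<EOF
50 PI, co-PI and Sr. Personnel team
20 Open Science Grid
25 General Lehigh Research Community
5 Lehigh Provost
EOF
```

The four shares add up to the full annual total, so no SUs are left unallocated.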

Storage

The 11TB CephFS space will not be partitioned but will be combined with the CephFS from the Ceph cluster to provide a 29TB (as of Mar 2021) distributed scratch file system. Of the available 215TB Ceph space,

- 85TB (40%) will be allocated to the PI, co-PI and Sr. Personnel team

- 75TB (35%) will be available to the General Lehigh Research Community

- 30TB (14%) is allocated to R Drive and available to all faculty

- 20TB (10%) will be distributed at the discretion of Lehigh Provost

- 5TB will be shared with OSG

Project Timeline

- Award Announcement: June 5, 2020

- Issue RFP: July 2 or 6, 2020 - Completed

- Questions Due: July 13, 2020 - Completed

- Questions returned: July 17, 2020 - Completed

- Proposals/Quote Due: July 31, 2020 - Completed

- Vendor Selection: August 14, 2020 - Awarded bid to Lenovo

- Delivery: September 30, 2020

- Actual Purchase: Sep 1, 2020

- Actual Delivery: Sep 21 - Oct 5, 2020 (shipping began 9/17; all items had shipped by 9/28)

- Rack, cable and power nodes: Scheduled for week of Oct 12

- Install OS

- Scheduler up and running

- Testing Begins: November 1, 2020

- User Friendly mode - co-PI, Sr. Personnel Team: November 12, 2020

- User Friendly mode - everyone else: December 7, 2020

- Production/Commission Date: February 1, 2021

- Target Decommissioning Date: Dec 31, 2025